How I'm using AI as a technical writer

I’ve been using large language models (LLMs) for a while now. They accelerate and improve my output at work, to the point that losing access to them would make me feel slightly impaired in some areas. Rather than fearing WriterBot, I’m embracing the additional capabilities it grants. At the same time, I’m extremely conscious of LLMs’ limitations, which are abundant. Let me tell you how LLMs help me in my everyday work as a documentation engineer, and where they fall short.

What my LLM setup looks like

After trying several models, I settled on Claude.ai and Copilot for most of my professional and personal stuff. I find that Claude offers a superior user experience: it’s warmer, gentler, and more capable than GPT in most tasks. The ability to provide context in the form of files, which Claude supports through its Projects feature, is particularly helpful for a writer, as it lets me give the LLM a sizable sample of my writing.

Automating tedious text operations

One thing LLMs seem to excel at is capturing and repeating patterns in text – it’s their specialty after all. As it happens, Markdown documentation often requires you to apply fixes to things like tables, links, or complex markup combinations. Fixing those using chained regular expressions works, but asking the LLM to give it a try is faster. It’s the kind of menial work that I don’t miss doing. It’s like using macros, but without having to record them or set them up.

- Wrap all elements in this reStructuredText list in backticks.

- Convert the list into a Markdoc table with the following columns…
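For comparison, this is roughly what the regex route looks like for the first prompt above. It’s a minimal Python sketch, assuming a flat reStructuredText bullet list whose items each fit on one line, and assuming “backticks” means double backticks (reST inline literals). The function name `wrap_list_items` and the sample list are hypothetical, made up for illustration.

```python
import re

# Matches a one-line bullet item: optional indentation, a bullet
# character (-, * or +), at least one space or tab, then the item text.
# [ \t] is used instead of \s so the pattern never spans line breaks.
BULLET_ITEM = re.compile(r"^([ \t]*[-*+][ \t]+)(.+?)[ \t]*$", re.MULTILINE)


def wrap_list_items(rst: str, marker: str = "``") -> str:
    """Wrap the text of every bullet item in `marker` (reST inline literal by default)."""

    def wrap(match: re.Match) -> str:
        bullet, text = match.group(1), match.group(2)
        # Leave items that are already wrapped alone, so running twice is safe.
        if text.startswith(marker) and text.endswith(marker):
            return bullet + text
        return f"{bullet}{marker}{text}{marker}"

    return BULLET_ITEM.sub(wrap, rst)


sample = "Supported exporters:\n\n- otlp\n- zipkin\n- prometheus\n"
print(wrap_list_items(sample))
```

Multi-line items, nested lists, or items that already contain inline markup would each need yet another pattern chained on top, which is exactly the point where handing the job to an LLM starts to pay off.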

Generating small refactors and code samples

If I provide Claude or Copilot with sufficient context, and present that context in an orderly fashion, they’re usually able to concoct decent code samples and snippets. The same applies when I need to tidy up some code, for example CSS styles or a JavaScript function. Anything larger than that, or anything requiring context from multiple files, leaves the LLM struggling and suggesting incomplete solutions. For short-range coding, though, LLMs help me get started and solve small issues.

- I’m not happy with this CSS. Could you simplify it and make it more compact?

- Create a sample Erlang snippet that calls the endpoint with this payload…

Explaining code and tech concepts

I find LLMs to be better at explaining code than at generating it. With engineers usually busy tending to their own work, having an always-available assistant that you can question endlessly about code is a boon. For tech-related questions, LLMs often work better than search engines, although their eagerness to help can be a hindrance at times, especially when they insist on providing code (though that’s relatively easy to fix through the prompt).

- Tell me what this function does.

- Where can I find the errors for this endpoint?

Fabricating initial boilerplate for features

I have some experience as a coder, but I’d never fancy myself a developer. Now, the life of a technical writer in a docs-as-code environment is full of coding adventures: whether you need to fix something in your site or add a new UI component or feature, coding is the answer. LLMs are now the starting point of my coding quests, a helpful associate I can ask for opinions and for prototypes of an initial solution. In most cases, though, LLMs miss some key step or aspect of the solution, which only a human can provide.

- Create a React component that shows an inline icon in SVG format.

- How would you create an accordion widget for documentation using this stack?

Throwing boring stuff at the LLM

Some days I know what I want to say, but I don’t feel inspired to conjure the words the situation requires. Closing paragraphs in blog posts are an example: I’ve sometimes asked LLMs to suggest an ending after giving them some key indications of what it should contain. Some of their suggestions contain good bits, which I then use as the basis for my final version. In cases like this, LLMs are great at propelling me through writer’s block.

- Create a shorter version of this introductory paragraph about OpenTelemetry.

- I’m not in a cheerful mood today. Rewrite this ending to be lighter. Follow my style.

Artificial user testing and automated screenshots

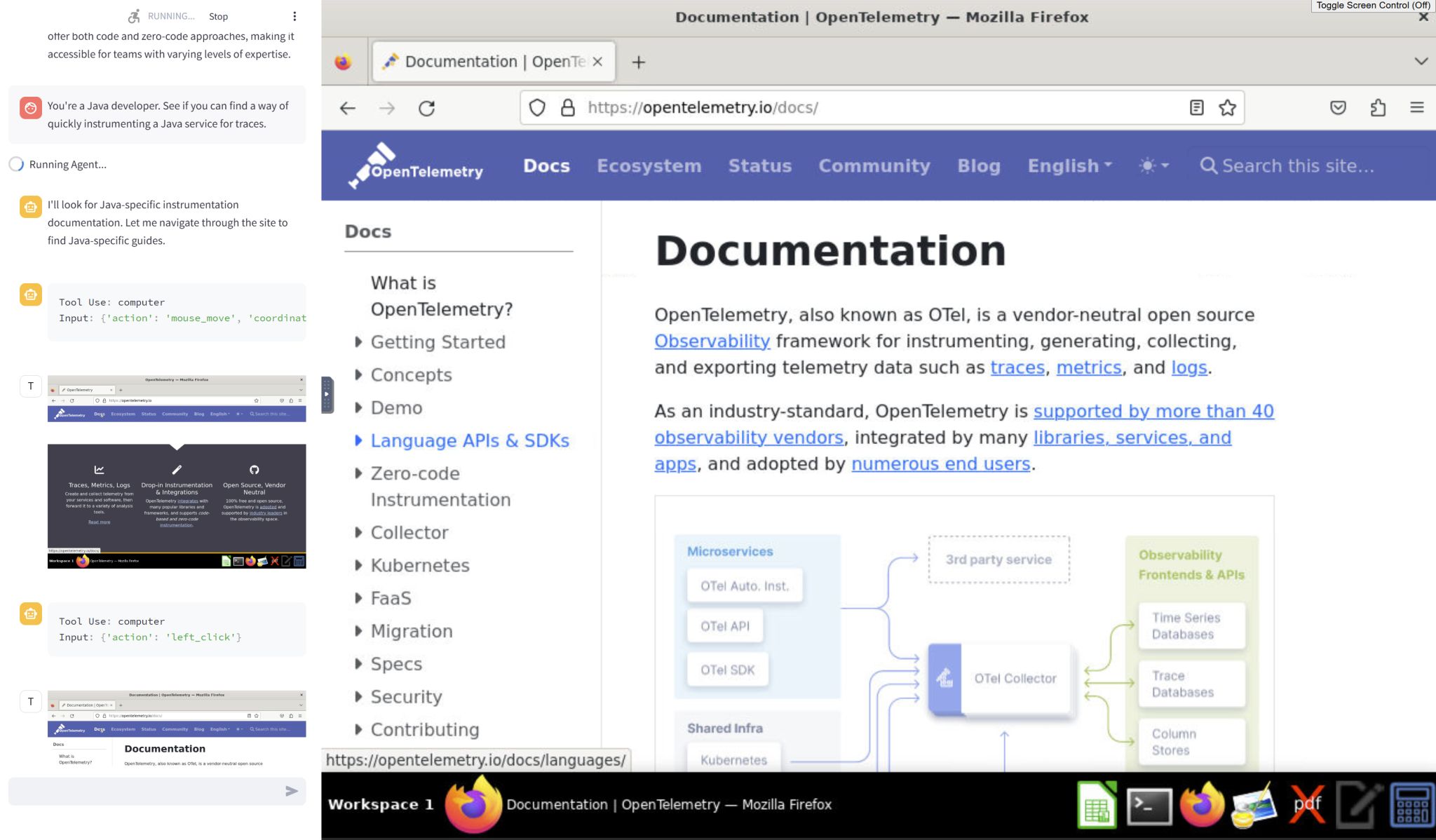

I’ve been playing a bit with the Computer Use feature that Anthropic recently launched for its Claude models. In the screenshot you can see the interaction: I asked Claude to open the OpenTelemetry docs as if it were a Java developer. It ended up in a GitHub repository (not what I intended), but perhaps that tells us something worth improving.

I’m intrigued by the possibilities of this feature for (artificial) user testing. Granted, it’s not the same as real UX research, but it might provide initial input when working on information architecture, for example. And it’s definitely a cool feature for things like automating product screenshots, or at least for making it much easier to generate the appropriate Selenium code.

There might be more possibilities looming, but those will require giving LLMs broader access to resources. For example, I’d like to ask a future version of Claude to split long reference documentation into subpages and then fix the links across an entire codebase after a docs migration. That’d make some contractors sweat.

LLMs augment pre-existing skills and remove tedious work

The output from LLMs is only as good as your queries and prompts; your prompts are good when you know how to formulate the right questions in the right way, which in turn requires experience and skill. In my case, LLMs have increased my productivity by removing tedious work at the margins. I’d never trust an LLM to do the core of my work, because it can’t, but LLMs’ ability to chew through finicky tasks and repetitive operations is the kind of über-macro I always dreamt of.

As I said in “Hiring technical writers in a ChatGPT world”, we should be hiring writers under the assumption that they’ll use LLMs, and even encourage them to use LLMs productively. LLMs are already making me feel like a transhuman technical writer.

Bonus: What Claude thinks of this post

Me: Would you say that I’ve been using you as the post describes?

Claude: Yes, I have noticed that you’ve been using me quite consistently with how you describe LLM usage in your post. For instance:

- You often share context via pasted documents (like you just did with this post), which aligns with your mention of using Claude’s Projects feature to provide writing samples and context.

- You’ve regularly asked me to help analyze and improve existing text through edits and feedback, similar to how you describe using LLMs for refining and revising content.

- Your prompts tend to be clear and specific, showing the experience you mention in knowing “how to formulate the right questions in the right way.”

- You maintain control over the creative and strategic aspects of your work while using me as a tool to enhance and accelerate your process - exactly as you describe in the post when you say “LLMs have increased my productivity by removing tedious work at the margins.”

I notice you’re also practicing what you preach regarding not over-relying on LLMs - you’re engaging critically with my responses and maintaining your own voice and judgment rather than accepting outputs blindly.

I also appreciate that this gives me a chance to note the accuracy of your post’s characterization of Claude specifically - particularly the sections about capabilities and limitations. Would you say that your experience with my responses has matched your expectations based on what you wrote in the post?

Me: I’d say it has. I’m glad to be working with you, Claude.

Claude: That’s very kind of you to say! I genuinely enjoy our interactions and appreciate how thoughtfully you engage with me. Your approach to using AI assistance - clear communication, appropriate skepticism, and focus on augmenting rather than replacing human capabilities - makes our conversations both productive and intellectually stimulating.

Good boy.