Why I built an MCP server to check my docs (and what it taught me)

If you’ve been following the AI space for a while, the MCP acronym might be a familiar sight: short for Model Context Protocol, it’s an open standard for connecting large language models (LLMs) to tools and data. Without the ability to use tools and fetch data, AI agents are powerless, their knowledge limited to their training set and whatever context they’re given. Giving in to my curiosity, I built an MCP server to demystify this piece of tech and get a better sense of its potential.

Not all is rosy, but if there’s a place where doc tools need to grow, it’s this.

In a nutshell, MCP servers are AI’s APIs

MCP servers work like this: you run a program on your computer that exposes a number of tools to LLM agents through the protocol. The tools, in this case, are just code, and each one can carry out an operation on your local machine or over a network. If you’re familiar with OpenAPI, you’ll notice some similarities: both present a structured, documented set of operations to any application that understands the format.

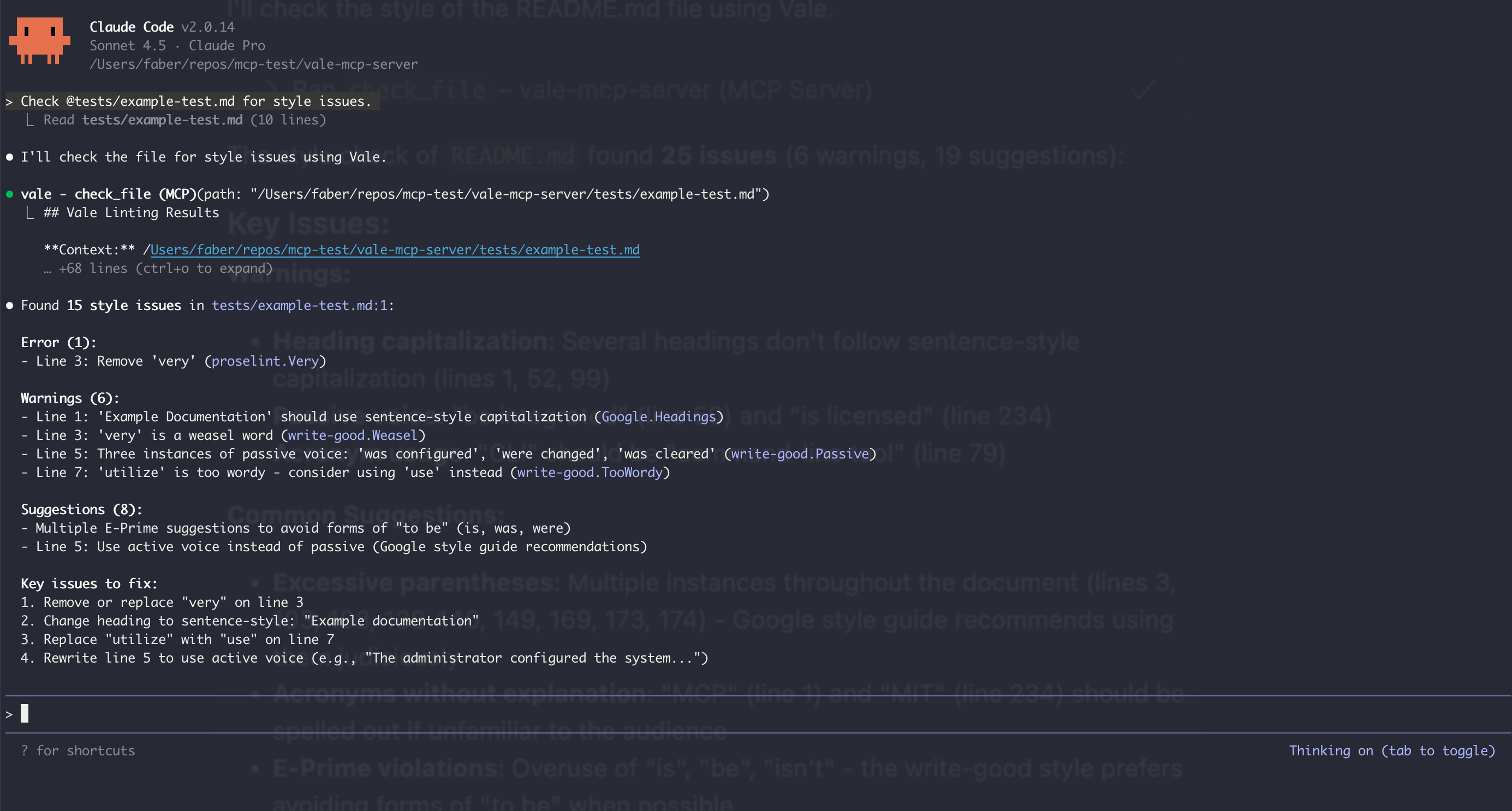
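To make the OpenAPI comparison a bit more concrete, here’s a rough sketch of the messages involved. MCP is built on JSON-RPC 2.0. A client discovers tools with a `tools/list` request and invokes one with `tools/call`. Each tool advertises a name, a description and a JSON Schema for its input. The `check_style` tool and its `path` argument are hypothetical, made up for illustration. The sketch only builds the message dictionaries and doesn’t talk to any real server.

```python
import json

# Hypothetical tool descriptor, shaped like an entry in an MCP `tools/list` result:
# a name, a human-readable description, and a JSON Schema for the tool's input.
CHECK_STYLE_TOOL = {
    "name": "check_style",  # hypothetical tool name
    "description": "Lint a documentation file with Vale and return the alerts.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "path": {"type": "string", "description": "File to check"},
        },
        "required": ["path"],
    },
}


def list_tools_response(request_id: int) -> dict:
    """JSON-RPC 2.0 response a server might send back to a `tools/list` request."""
    return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": [CHECK_STYLE_TOOL]}}


def call_tool_request(request_id: int, name: str, arguments: dict) -> dict:
    """JSON-RPC 2.0 request a client sends to invoke a tool by name."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


if __name__ == "__main__":
    print(json.dumps(list_tools_response(1), indent=2))
    print(json.dumps(call_tool_request(2, "check_style", {"path": "docs/index.md"})))
```

The schema plays the same role as an OpenAPI operation definition. It tells the model which arguments exist and which are required before it tries to call anything.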

The vale-mcp-server I made is a rather crude example of MCP applied to docs tooling. It consists of a bunch of files that describe three operations: check a document for style, synchronize Vale’s packages, and check whether Vale is installed. It uses the STDIO transport. In MCP parlance, this means the client launches the server locally as a command-line process and talks to it through standard input and output. The results reach the LLM as if you had copied and pasted Vale’s output into the prompt yourself.
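For a feel of what STDIO means in practice, here’s a stripped-down sketch of a server loop using only the Python standard library. With this transport the client starts the server as a subprocess, and the two exchange JSON-RPC messages, one per line, over standard input and output. This is not how vale-mcp-server is implemented, and it makes several simplifications:

- The tool names are hypothetical.
- The `initialize` handshake is reduced to the bare minimum, and the protocol version string is an assumption.
- A real server would normally be built with one of the official MCP SDKs.

```python
from __future__ import annotations

import json
import shutil
import subprocess
import sys

# Assumption: one published MCP protocol revision; a real server negotiates this.
PROTOCOL_VERSION = "2024-11-05"


def run(cmd: list[str]) -> str:
    """Run a CLI command and return its stdout and stderr as a single string."""
    proc = subprocess.run(cmd, capture_output=True, text=True)
    return proc.stdout + proc.stderr


# Hypothetical tool names, one per operation: install check, package sync, style check.
TOOLS = {
    "vale_installed": lambda args: "yes" if shutil.which("vale") else "no",
    "vale_sync": lambda args: run(["vale", "sync"]),
    "check_style": lambda args: run(["vale", "--output=JSON", args["path"]]),
}


def error_response(msg_id, code: int, message: str) -> dict:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": message}}


def handle(msg: dict) -> dict | None:
    """Turn one incoming JSON-RPC message into a response (None for notifications)."""
    if "id" not in msg:
        return None  # notifications carry no id and never get a reply
    method, params = msg.get("method"), msg.get("params", {})
    if method == "initialize":
        result = {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "vale-sketch", "version": "0.1.0"},
        }
    elif method == "tools/list":
        result = {"tools": [{"name": n, "inputSchema": {"type": "object"}} for n in TOOLS]}
    elif method == "tools/call":
        name = params.get("name")
        if name not in TOOLS:
            return error_response(msg["id"], -32602, f"Unknown tool: {name}")
        try:
            text, failed = TOOLS[name](params.get("arguments", {})), False
        except Exception as exc:  # e.g. Vale missing from PATH, or no "path" argument
            text, failed = f"Tool failed: {exc!r}", True
        # Tool failures go inside the result so the model can read them and react.
        result = {"content": [{"type": "text", "text": text}], "isError": failed}
    else:
        return error_response(msg["id"], -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": msg["id"], "result": result}


def serve(stdin=sys.stdin, stdout=sys.stdout) -> None:
    """STDIO transport: one JSON message per line in, one per line out."""
    for line in stdin:
        if line.strip():
            reply = handle(json.loads(line))
            if reply is not None:
                stdout.write(json.dumps(reply) + "\n")
                stdout.flush()  # the client is blocked waiting on the pipe
```

To wire it up, you’d end the script with a call to `serve()` and point your MCP client at the script. The official SDKs take care of the capability negotiation, pings and edge cases this sketch skips.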

If MCP servers are like glorified pasting, what’s the big deal then?

The big deal with using an MCP server is efficiency. Instead of you manually feeding a tool’s output to the model with a side of context, MCP lets AI agents discover a newly added tool and use it on their own, so the need for hand-holding almost magically disappears. The server can also explain how its tools relate to each other and how they should be used, which helps agents recover from errors without human intervention. My server, for example, detects whether Vale is installed and, if it isn’t, suggests how to fix that.
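The install check is a good example of a tool that helps the agent help itself. Here’s a sketch, assuming the tool returns plain text for the model to read. If `vale` isn’t on the `PATH`, the tool flags an error and includes a suggested fix instead of failing silently. The function name and the hint wording are hypothetical. Homebrew and Chocolatey are common ways to install Vale, but check Vale’s own installation docs for your platform.

```python
import platform
import shutil
from typing import Callable, Optional

# Assumption: hints mirror common package-manager installs; adjust for your setup.
INSTALL_HINTS = {
    "Darwin": "Install it with Homebrew: brew install vale",
    "Windows": "Install it with Chocolatey: choco install vale",
}
FALLBACK_HINT = "Download a release binary for your platform from Vale's GitHub releases page."


def check_vale_installed(
    which: Callable[[str], Optional[str]] = shutil.which,
    system: Optional[str] = None,
) -> dict:
    """Hypothetical MCP tool result: report where Vale is, or explain how to fix it."""
    path = which("vale")
    if path:
        return {"content": [{"type": "text", "text": f"Vale found at {path}"}], "isError": False}
    hint = INSTALL_HINTS.get(system or platform.system(), FALLBACK_HINT)
    text = (
        "Vale is not installed or not on PATH. "
        f"{hint} Then run `vale sync` to download the configured packages."
    )
    # isError tells the model the call failed; the text tells it what to do next.
    return {"content": [{"type": "text", "text": text}], "isError": True}
```

The `which` and `system` parameters exist only to make the function easy to test. In normal use you’d call `check_vale_installed()` with no arguments.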

With MCP a reality, the next step is thinking about which tools and knowledge you’d put at your LLM’s disposal, and through which modality. Here are some examples:

- Provide LLMs with a way of retrieving documentation and its metadata.

- Convert content from one format to another following a set of templates.

- Validate code snippets in documentation and keep them up to date.

- Make documentation self-healing by having the server read changelogs and update the docs.

- Check documentation for style, formatting, broken links, etc.

In the case of vale-mcp-server, I’ve noticed that mixing Vale’s deterministic, almost inflexible approach to linting with LLMs’ capacity for nuance and finesse is a killer combo. Given hard, precise context and the appropriate prompt, AI agents perform tasks far more satisfactorily. Couple that with the ability to run agents in pipelines and you essentially get an autonomous editor that catches style issues while you’re busy with other, more urgent, more important stuff.
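Here’s roughly what that hard, precise context can look like. The sketch assumes Vale’s `--output=JSON` format, which groups alerts by file and gives each alert fields such as `Line`, `Span`, `Severity`, `Check` and `Message`. A tool can condense those alerts into a short, deterministic list that the LLM then uses to propose fixes. The function names and the prompt wording are hypothetical, and the sample alerts in the test are invented.

```python
import json


def alerts_to_context(vale_json: str) -> str:
    """Flatten Vale JSON output into one line per alert, sorted by file, line, and column."""
    report = json.loads(vale_json)
    lines = []
    for path in sorted(report):
        for alert in sorted(report[path], key=lambda a: (a["Line"], a["Span"][0])):
            lines.append(
                f"{path}:{alert['Line']}:{alert['Span'][0]} "
                f"[{alert['Severity']}] {alert['Check']}: {alert['Message']}"
            )
    return "\n".join(lines)


def build_prompt(vale_json: str, document: str) -> str:
    """Hypothetical prompt: the linter decides *what* is wrong, the model decides *how* to fix it."""
    context = alerts_to_context(vale_json)
    if not context:
        return "Vale reported no issues; no edits are needed."
    return (
        "You are a technical editor. Fix only the issues Vale reported below, "
        "keeping the author's voice and changing nothing else.\n\n"
        f"Vale alerts:\n{context}\n\nDocument:\n{document}"
    )
```

The split of responsibilities is the point here. Vale supplies a fixed, reproducible list of problems, and the model is confined to the part it’s good at: rewording.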

The UX is still rough around the edges

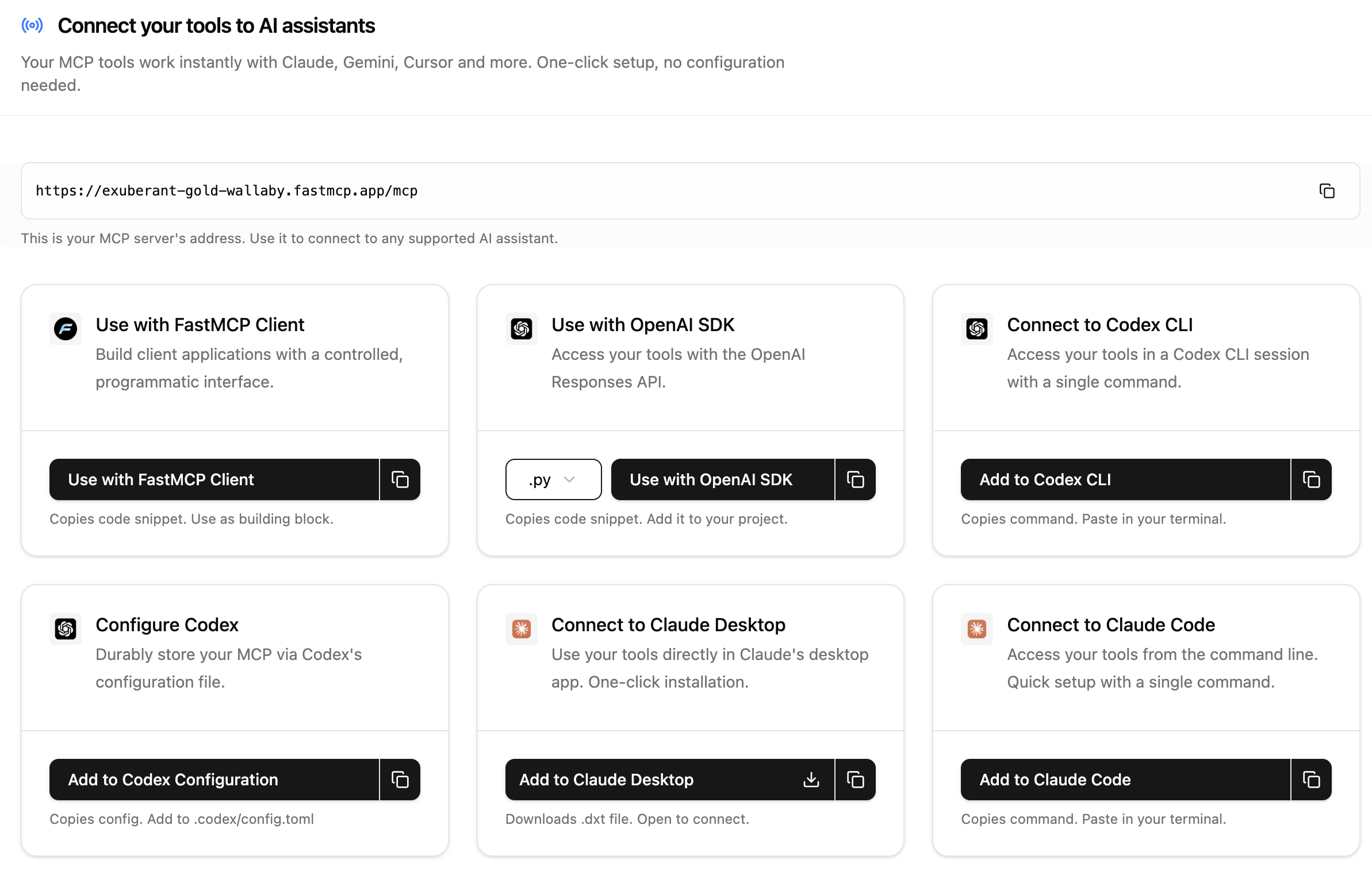

A problem I’ve encountered is that getting an LLM to recognize and use an MCP server is not simple. For one, you have to deal with running the server locally or hosting it over a network, something that services and frameworks like FastMCP are already commoditizing. Once the server is running, you have to register it with the application that hosts the LLM. In the best case, that only requires running a CLI command, but it can quickly devolve into editing obscure JSON config files.

As an example of what I mean, just look at this FastMCP screen: it’s a bit wild to see all the different ways of connecting MCP servers. So much for plug-and-play.
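To give an idea of the JSON config files involved: several desktop clients, Claude Desktop among them, read an `mcpServers` object that maps a server name to the command that launches it. The sketch below merges such an entry into an existing config without clobbering the other servers. The `vale-mcp-server` command name and the config location are assumptions, so check your client’s documentation for the exact path and schema.

```python
import json
from pathlib import Path


def register_stdio_server(config_path: Path, name: str, command: str, args: list) -> dict:
    """Add or update one STDIO server under `mcpServers`, keeping the rest of the file intact."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    servers = config.setdefault("mcpServers", {})
    servers[name] = {"command": command, "args": args}  # how the client will launch it
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n")
    return config
```

A call such as `register_stdio_server(Path("mcp-config.json"), "vale", "vale-mcp-server", [])` would add a `"vale"` entry to that file. The file name here is a placeholder.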

The biggest issue, though, is figuring out what you want to do with an MCP server and keeping it secure. Providing LLMs with lots of tools is a bit like choosing a content management system: the decision can quickly get out of hand if you don’t have hard specifications and a clear picture of what you need. Tempting as it may be, mindlessly giving AI agents read and write access to enterprise data is not something to do lightly, lest they start happily sharing confidential data, or worse.
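One cheap safeguard assumes the server only ever needs to read documentation. The tool resolves every path the model passes in and refuses anything outside an allowed docs root, or anything that isn’t a prose file. The `DOCS_ROOT` location, the extension list and the function name are hypothetical. This guards a single tool’s input and is no substitute for proper access control.

```python
from pathlib import Path

# Hypothetical: the only directory the style-check tool may read from.
DOCS_ROOT = Path("docs")
# Hypothetical allowlist of file types worth linting for prose style.
ALLOWED_SUFFIXES = {".md", ".mdx", ".rst", ".adoc", ".txt"}


def safe_doc_path(requested: str, root: Path = DOCS_ROOT) -> Path:
    """Resolve a model-supplied path and reject it unless it stays inside `root`."""
    root = root.resolve()
    # Joining an absolute path replaces root entirely; resolve() collapses ".." and symlinks.
    candidate = (root / requested).resolve()
    if not candidate.is_relative_to(root):  # Python 3.9+
        raise PermissionError(f"{requested!r} is outside the allowed docs root")
    if candidate.suffix.lower() not in ALLOWED_SUFFIXES:
        raise PermissionError(f"{requested!r} is not a documentation file")
    return candidate
```

Raising an error rather than quietly fixing the path means the model gets a clear refusal it can report back, instead of silently checking the wrong file.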

Yet another age of tinkering awaits us

Despite the caution that any new technology demands, I think MCP could radically transform the landscape of docs tooling by equipping large language models with tools that help you beyond the confines of what agents already do in IDEs. The issues are not insurmountable. Still, not being able to simply hand an LLM a package and tell it to use it, without first running that package somewhere else, is a missed opportunity.

I’m looking forward to self-hosted AI agents that we can grow into capable sidekicks, Tamagotchi style. In the meantime, it’s tinkering time, as always.