OpenAI and docs: AI-aided technical writing is here to stay

I get it. It’s hard to read articles about OpenAI’s coding and writing skills without feeling a shiver of neo-luddite panic running down your spine. Especially when one reads passages like the following.

One could even imagine Codex automatically writing documentation for a company or open source project’s existing codebase. […] Codex could fix this documentation gap automatically.

It doesn’t matter that Codex, OpenAI’s latest brainchild, only gets things right about 37% of the time, or that it feeds on existing codebases: many writers already wonder when they’ll be replaced by an AI. After all, they argue, most documentation is boilerplate that reproduces similar patterns over and over. In some cases, we’re already automating it ourselves!

Effective or not, I do not fear AIs, and neither should you. I, for one, welcome our new cybernetic assistants. They’re here to make our lives easier.

Let AIs deal with the boring bits

Before jumping out of your chair, consider this: only a fraction of a technical writer’s job consists of writing documentation. Most of the time, we’re:

- Researching and testing new features and technologies

- Thinking about the documentation structure

- Building systems to automate and publish docs

- Analyzing documentation metrics and feedback

- Meeting with subject-matter experts

- Refining existing documentation

The items in the list above cannot be easily automated due to their inherent complexity. An AI can write a series of steps based on the underlying code, but it cannot decide where the documentation should go, how the content should be organized, which admonitions should be added and where, or when the docs should be published and for whom. More importantly, an AI cannot answer the whys, because it’s impossible for it to care.

Now, if there were a computerized minion that could flesh out drafts for me, I’d be immensely happy. It would take away the most boring bits of the job and let me focus on what really matters in technical documentation: helping people find answers to their questions through carefully planned content strategies and editing. Engineers would be happy about it, too, as the AI would do most of the SME heavy lifting for them.

When they finally sit down and write, technical writers aim to achieve clearly defined goals in the shortest possible time, because their backlog is never-ending. Something similar happens with developers: the deeper their work, the less concerned they are with code, which is often boilerplate anyway, according to some. Besides, writers look at other docs for inspiration, much like devs browse GitHub or Stack Exchange for code samples; an AI would simply get rid of that additional step.

Enter AI-aided technical writing

The goal of AI-aided technical writing is to assist humans in carrying out repetitive writing tasks faster and with greater accuracy. Only the simplest writing jobs, those that are highly mechanical and add little value, would be fully replaced by software. Consider, for instance, the generation of API docs from OpenAPI specs. The stuff that really provides value to readers would remain in tech writers’ hands, which are almost always quite full, as most writers work in small teams or alone.
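
To give an idea of how mechanical that kind of job is, here is a minimal sketch of a reference generator. It assumes the OpenAPI document has already been loaded into a Python dictionary; the `SPEC` sample and the `render_reference` function are made up for illustration and cover only a tiny part of what a real spec contains.

```python
# Hypothetical, trimmed-down OpenAPI document; real specs carry far more detail.
SPEC = {
    "info": {"title": "Pet Store", "version": "1.0.0"},
    "paths": {
        "/pets": {
            "get": {"summary": "List all pets"},
            "post": {"summary": "Create a pet"},
        },
        "/pets/{petId}": {
            "get": {
                "summary": "Get a pet by ID",
                "parameters": [
                    {"name": "petId", "in": "path", "required": True},
                ],
            },
        },
    },
}

# Operation keys that a path item may contain.
HTTP_METHODS = ("get", "put", "post", "delete", "options", "head", "patch", "trace")


def render_reference(spec: dict) -> str:
    """Render a bare-bones Markdown reference from an OpenAPI-style dict."""
    info = spec.get("info", {})
    title = f"# {info.get('title', 'API')} {info.get('version', '')}".rstrip()
    lines = [title, ""]
    for path, operations in spec.get("paths", {}).items():
        for method in HTTP_METHODS:
            operation = operations.get(method)
            if operation is None:
                continue
            lines.append(f"## {method.upper()} {path}")
            lines.append(operation.get("summary", "No summary provided."))
            for param in operation.get("parameters", []):
                required = "required" if param.get("required") else "optional"
                lines.append(f"- `{param['name']}` ({param['in']}, {required})")
            lines.append("")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_reference(SPEC))
```

Nothing in the sketch decides what readers need to know first, or why an endpoint exists; it only reshapes what the spec already says.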

The shift towards computer-aided writing is already happening in other fields. Translators, our beloved cousins, have been debating the usefulness of machine translation (MT) for decades now, wary of the consequences of being replaced by neural networks. The end result is that they’ve been integrating machine-translated texts into their workflows more and more, while defending the quality of human-edited translations.

Lokalise is a CAT tool that provides MT shortcuts in its user interface

Machine translation is now essential to computer-aided translation (CAT), which facilitates the work of translators. MT is rarely used in isolation, though: ask any translator and they’ll tell you that the MT + CAT combo makes their lives easier, but that no serious client would accept work that has been machine translated with no human in the loop.

In the case of technical documentation, companies should never accept unedited AI-generated documentation as a finished deliverable. As with machine-translated text, the temptation to rely on purely automatic systems is strong, but it must be resisted, for example by showing what kind of silly results such systems produce when left unedited.

It’s our job as technical writers to understand the benefits and limitations of machine writing and to integrate it into our processes when it makes sense.

A proposal for AI-aided workflows

The best way to avoid being buried by change is to lead it. We should start thinking about ways to integrate AI-aided writing into our workflows, so as to harness its power in a productive, sustainable way.

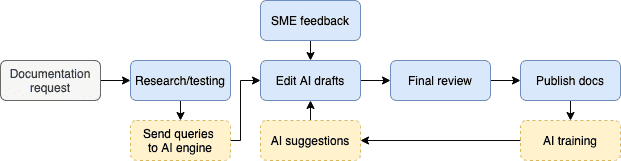

Here is a simple proposal of what an AI-aided technical writing workflow could look like; the AI-powered bits are in yellow with dashed borders. This diagram is partially based on Amirhossein Tebbifakhr’s article on automatic post-editing and machine translation workflows.

Work starts with a request, for example, “Document the new API endpoints”. The tech writer in charge would do some initial research on the topic or problem space and prepare queries for the AI engine in the form of sentences, documentation stubs, or code samples. This step could be automated to a certain degree, so that highly structured requests could be sent directly to the AI (Terminator loves continuous integration).

Next, the tech writer would edit the AI-produced drafts, checking with SMEs and leveraging the AI further to find alternatives. After a final review, the docs would be published as usual, and the edited result would be fed back to the AI engine for training purposes. Future drafts would then be better from the start, improving in the way they use product terminology and follow the documentation style guide.
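
The loop described above could be sketched roughly as follows. This is only an illustration under heavy assumptions: every name in it (`DocRequest`, `FeedbackStore`, `run_workflow`, and the fake engine and editor) is hypothetical, and the AI engine is a stand-in function rather than a call to any real service.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class DocRequest:
    """A documentation request plus the material gathered during research."""
    title: str
    source_material: List[str]  # sentences, documentation stubs, or code samples


@dataclass
class FeedbackStore:
    """Collects (draft, edited) pairs that could later be used for training."""
    pairs: List[Tuple[str, str]] = field(default_factory=list)

    def record(self, draft: str, edited: str) -> None:
        self.pairs.append((draft, edited))


def build_query(request: DocRequest) -> str:
    """Turn the writer's research into a single query for the engine."""
    material = "\n".join(f"- {item}" for item in request.source_material)
    return f"Task: {request.title}\nSource material:\n{material}"


def run_workflow(
    request: DocRequest,
    draft_engine: Callable[[str], str],
    human_edit: Callable[[str], str],
    feedback: FeedbackStore,
) -> str:
    """Request -> query -> AI draft -> human edit -> feedback -> publishable text."""
    query = build_query(request)
    draft = draft_engine(query)      # the AI-powered step
    edited = human_edit(draft)       # writer and SME review happens here
    feedback.record(draft, edited)   # edited result is kept for future training
    return edited


def fake_engine(query: str) -> str:
    """Placeholder for the AI engine; it only echoes the query."""
    return "DRAFT based on:\n" + query


def fake_editor(draft: str) -> str:
    """Placeholder for the human edit; a real one is a person, not a function."""
    return draft.replace("DRAFT", "Reviewed draft")


if __name__ == "__main__":
    store = FeedbackStore()
    request = DocRequest("Document the new API endpoints", ["GET /pets returns a list"])
    print(run_workflow(request, fake_engine, fake_editor, store))
```

The engine and the editor are passed in as plain functions so that either side can be swapped out; the human edit sits between the draft and anything that gets published.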

A process like the above would require technical writers to become attuned to their new AI companion, and to learn how to best feed it with questions and source material. Optimizing queries is perhaps the hardest part of the workflow: what chain of inputs will yield the best possible AI-generated drafts? And how do we feed back our ratings? Where do we draw the line in terms of quality?

Sounds like we should give all that a try and see for ourselves.