What's wrong with AI-generated docs

In what is tantamount to a vulgar display of power, social media has been flooded with AI-generated images that mimic the style of Hayao Miyazaki’s anime. Something similar happens daily with tech writing: folks happily throw context at LLMs, thinking they can vibe write outstanding docs out of them, perhaps even surpassing human writers. Well, it’s time to draw a line. Don’t let AI influencers studioghiblify your work as if it were a matter of processing text.

While I’ve written about the benefits of AI augmentation on several occasions, I would never suggest that LLMs can be used to generate documentation beyond carefully delimited scenarios, such as API docs or code snippets, that is, cases where documentation is produced from clear and concise sources, in almost programmatic ways. Echoing the thoughts in “Get the hell out of the LLM as soon as possible”, you should limit LLMs to tasks where they truly excel.

On the other hand, when you let LLMs generate entire documentation sets, problems arise. “Oh, you can’t do serious programming, but you can still use LLMs for docs” is a naïve, reckless statement that not only overlooks what goes into crafting excellent documentation but also shows disregard for user experience (because docs are a product, too). To better illustrate my point, let me tell you what’s wrong with docs and docs sets entirely generated by LLMs.

They suffer from a severe case of READMEtitis

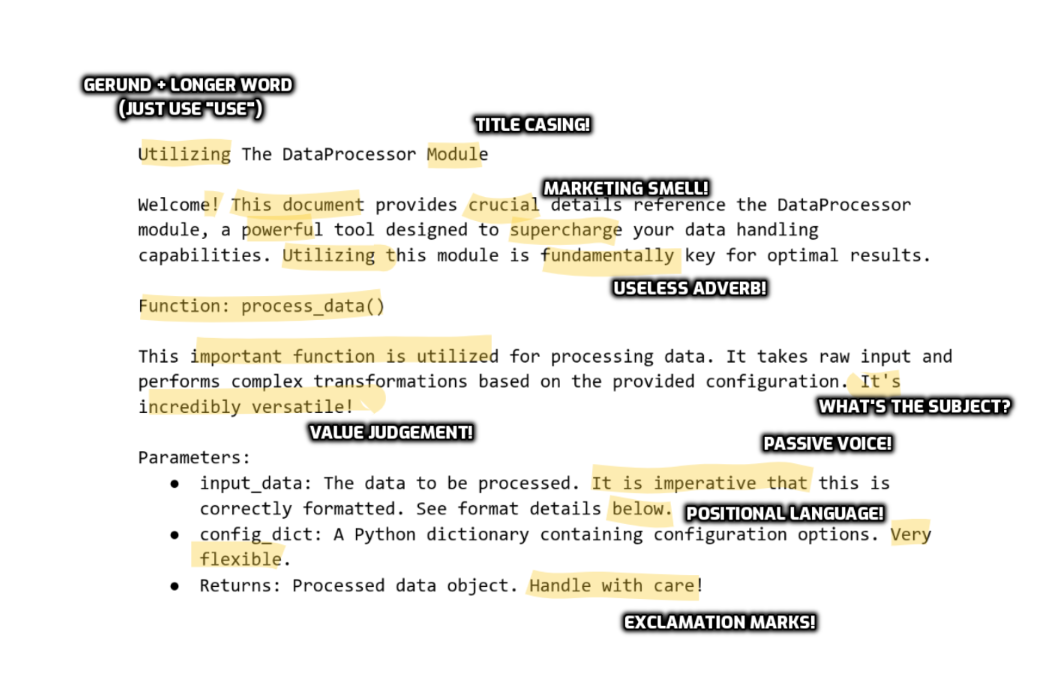

One of the most noticeable issues with LLM-generated documentation is that it reads like a hastily composed README file from a forsaken repo on GitHub. This READMEse dialect is an uncanny frankentongue made of cliches and bad practices that have plagued READMEs for decades. It goes against most style guides, with Latinisms, title casing, self-referentiality, overly informal style, passive voice, and other issues. Here’s an example made by Gemini, annotated by me:

It gets the work done, you say. Yes, and so does an inconsistent user interface made using Microsoft Paint. Will that help sell your product? Not really, no. This could be mitigated by enforcing a style guide on the LLM. However, my experience using Cursor says otherwise: enforcing a strict style on LLM-generated text is incredibly difficult. You need a technical writer or editor to check the text for formatting and style because the output isn’t deterministic. LLMs don’t really understand rules.

They make up things in subtle, hard-to-detect ways

LLMs lie even at the most basic level, that of auto-completing sentences. They make up things constantly in plausible and hard-to-detect ways. They may reuse an old version of a command or snippet, or end up projecting the wishes contained in your prompt into something that resembles truth but, in fact, contains none. When challenged, they’ll apologize and create another lie unless directed to the correct answer through sufficient feedback and examples.

This is by design: LLMs desperately want to be helpful, meaning they’d rather provide an answer than none, even when folks fiddle with their settings. This poses a problem when it comes to the delicate matter of accountability. Who is accountable when a document tells you to run a specific command that doesn’t exist? Who’s to blame? Who will be quoted in court if money is lost due to an LLM hallucinating? Accountability is a key aspect of our work as technical writers.

They lack strategic vision, context, and drive

While they can mimic structure, LLMs lack global vision, context, and the intentionality and subjectivity inherent in information architecture work. As I said in another post, LLMs cannot step back and see the bigger picture. Even if you could feed millions of tokens to its context window, an LLM would still be unable to develop a coherent overall strategy for your documentation that could inform docs structure, content reuse, docs UI features, voice and tone, and so on.

Documentation isn’t just a pile of facts; it’s an engineered experience designed to guide users effectively. Deciding what not to include is as important as deciding what to document. These are architectural choices well beyond the capabilities of pattern recognition. Leaving this to LLMs guarantees amorphous blobs of docs released once, never to be improved in later iterations or adjusted through research and user feedback.

They fail to capture product truth and nuance

Products are complex, hairy beasts, and so is the content that describes them. Behind every document for a mature feature are support tickets, direct customer feedback, and a certain tension caused by the motions a product goes through during development, a feeling of unfinishedness that’s hard to describe to outsiders. This tension is not known – can’t possibly be known – by an LLM unless it’s able to tap into our brains and extract the anxiety makers go through. I call it product truth.

Without this grounding in reality, docs generated by LLMs are hollow. They smile like signs posted in front of danger, oblivious to the context unfolding around them. Readers end up frustrated because the caveats, the edge cases, and the missing bits weren’t acknowledged. Docs explain not only what exists but also what you cannot do or shouldn’t be doing. These imperfections make docs great but require a caring, organic writer.

Augmentation? Yes. Substitution? Not gonna happen

You can replace tech writers with an LLM, perhaps supervised by engineers, and watch the world burn. Nothing prevents you from doing that. All the temporary gains in efficiency and speed would bring something far worse on their backs: the loss of the understanding that turns knowledge into a conversation. Tech writers are interpreters who understand the tech and the humans trying to use it. They’re accountable for their work in ways that machines can’t be.

The future of technical documentation isn’t replacing humans with AI but giving human writers AI-powered tools that augment their capabilities. Let LLMs deal with the tedious work at the margins and keep the humans where they matter most: at the helm of strategy, tending to the architecture, bringing the empathy that turns information into understanding. In the end, docs aren’t just about facts: they’re about trust. And trust is still something only humans can build.